CMO–CRO alignment often looks fine on paper but breaks in execution. This article shows how AI-driven revenue signals enable earlier correction and joint ownership of pipeline quality, velocity, and outcomes.

Marketing can hit its numbers. Sales can hit their numbers. And the organisation can still miss growth targets, carry a bloated pipeline, and spend every quarter arguing about quality instead of fixing it. For years, this was tolerated because the lag between decision and outcome was long enough to blur responsibility. But that tolerance is disappearing.

With AI, demand decisions surface their consequences much faster. Poor targeting shows up as stalled deals. Weak intent shows up as slow velocity. Pipeline doesn’t just grow or shrink; it survives or collapses in patterns that are hard to ignore. The familiar debates don’t disappear; they simply become less defensible. This is where the CMO–CRO relationship changes, because AI removes the ambiguity that alignment relied on. When both leaders are looking at the same revenue signals, coordination stops being the problem. Ownership becomes the issue.

What follows is a shift in how revenue leadership works when outcomes can be traced, assumptions tested, and weak signals exposed early. Some CMO–CRO partnerships adapt quickly. Others discover that what they called alignment was really just distance.

Why the CMO–CRO Relationship Was Structurally Misaligned

Alignment was measured on activity, not outcomes

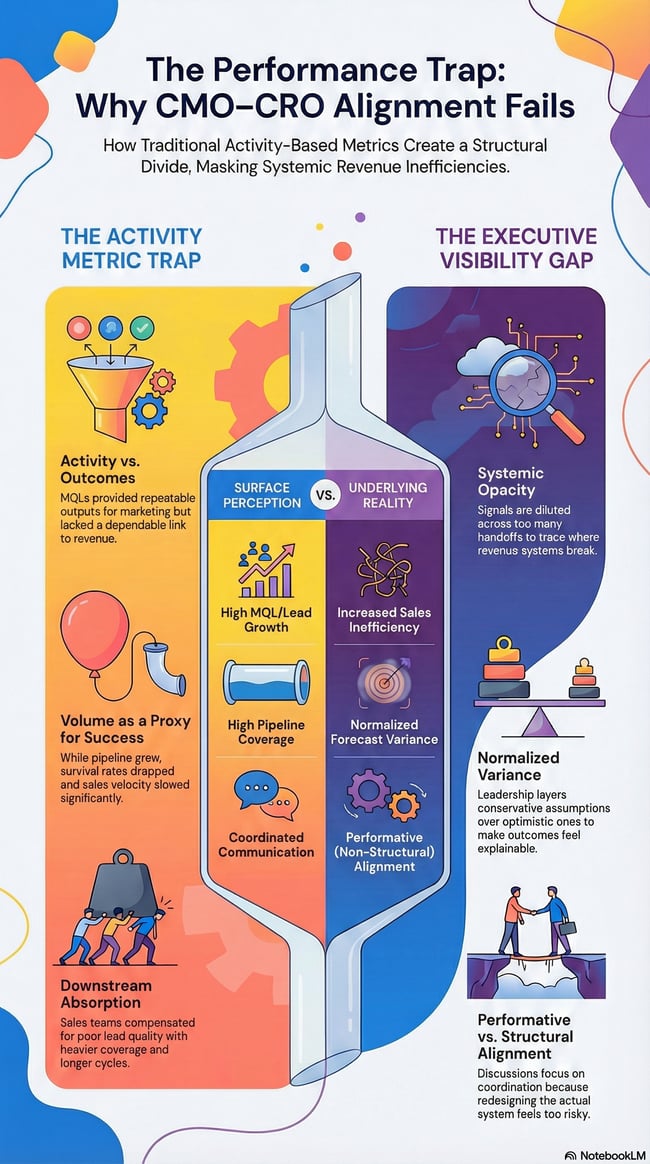

For a long time, alignment between marketing and sales was built around activity because activity was the only thing marketing could reliably control. MQLs solved a reporting problem: they gave marketing a visible, repeatable output that could be planned, forecast, and improved quarter over quarter. What they never provided was a dependable link to revenue outcomes.

Lead scoring made this gap easier to live with, not smaller. Buyer intent was translated into internal scoring models that reflected how marketing thought demand should behave, not how deals actually progressed. Sales teams learned quickly which leads were worth pursuing and which were not, but that insight rarely travelled back upstream in a way that changed how demand was created. The scoring system stayed intact; sales adapted around it.

Over time, volume became a proxy for effectiveness. Marketing could demonstrate growth by producing more leads. Sales could demonstrate competence by filtering harder and closing what remained. Both sides could “win” inside their own metrics while the overall revenue system became less efficient. Pipeline grew, but survival rates dropped. Velocity slowed. The cost of poor demand quality didn’t show up as a marketing problem — it showed up as sales inefficiency.

That inefficiency was largely absorbed. Sales teams compensated with better qualification, heavier coverage, and longer cycles. From an executive perspective, it looked like execution friction rather than a demand problem. The system rewarded downstream correction instead of upstream improvement, and alignment remained performative rather than structural.

Executives inherited a system that hid cause and effect

At the leadership level, the real issue was opacity. When revenue was missed, there was no reliable way to trace where the system broke. Was it targeting? Timing? Deal quality? Sales execution? By the time the miss was visible, the signal was already diluted across too many stages and handoffs to act on with confidence.

Forecast variance became normalised as a result. Pipeline coverage was increased to compensate. Conservative assumptions were layered on top of optimistic ones. None of this fixed the underlying issue, but it made outcomes feel explainable enough to move on. Alignment discussions focused on coordination and communication because redesigning the system felt risky and abstract.


What AI Exposes That Marketing and Sales Could Previously Ignore

AI collapses time between decision and consequence

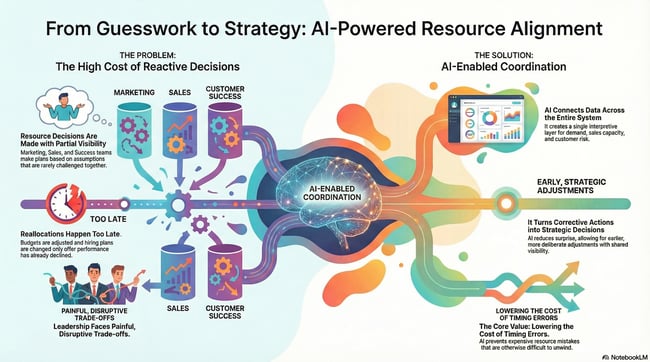

One of the biggest structural shifts AI introduces is speed, though in feedback rather than in execution. Historically, marketing decisions took months to show their full impact on revenue. By the time poor targeting or weak intent became visible, the organisation had already moved on to the next campaign cycle.

That buffer is shrinking fast:

- Campaign and targeting decisions can now be traced to pipeline survival rates within weeks. You don’t need to wait for closed revenue to see whether demand is healthy. You can see it in how deals progress, how long they stall, and which segments quietly drop out before they ever reach a serious buying stage.

- Deal quality decay becomes visible long before close. Weak intent doesn’t just show up as lost deals but also as slower movement, heavier sales effort, and inconsistent progression across stages. Velocity becomes an early warning system, not just an operational metric.

- Poor targeting, in particular, is harder to ignore. Instead of debating conversion rates in isolation, leadership teams see where momentum disappears. When velocity drops consistently for certain segments or campaigns, the issue is no longer abstract. The system is signalling friction, and it’s doing so early enough to act.
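To make the idea of segment-level survival and stall time concrete, here is a minimal sketch in Python. It assumes a hypothetical export of deals with a `segment`, the furthest stage each deal reached, and the days spent in its current stage. The stage names, field names, and figures are illustrative assumptions, not a reference to any particular CRM.

```python
from collections import defaultdict
from statistics import median

# Hypothetical stage order; replace with the stages your own pipeline uses.
STAGES = ["qualified", "discovery", "proposal", "closed_won"]


def survival_by_segment(deals, stages=STAGES):
    """Share of each segment's deals that reached a given stage or beyond."""
    rank = {stage: i for i, stage in enumerate(stages)}
    reached = defaultdict(list)
    for deal in deals:
        reached[deal["segment"]].append(rank[deal["furthest_stage"]])
    return {
        segment: {
            stage: sum(r >= rank[stage] for r in ranks) / len(ranks)
            for stage in stages
        }
        for segment, ranks in reached.items()
    }


def median_days_in_stage(deals):
    """Median days deals have sat in their current stage, per segment."""
    days = defaultdict(list)
    for deal in deals:
        days[deal["segment"]].append(deal["days_in_stage"])
    return {segment: median(values) for segment, values in days.items()}


# Illustrative data only (assumed, not taken from a real pipeline).
deals = [
    {"segment": "enterprise", "furthest_stage": "closed_won", "days_in_stage": 10},
    {"segment": "enterprise", "furthest_stage": "proposal", "days_in_stage": 14},
    {"segment": "enterprise", "furthest_stage": "proposal", "days_in_stage": 12},
    {"segment": "enterprise", "furthest_stage": "discovery", "days_in_stage": 20},
    {"segment": "smb", "furthest_stage": "qualified", "days_in_stage": 40},
    {"segment": "smb", "furthest_stage": "qualified", "days_in_stage": 35},
    {"segment": "smb", "furthest_stage": "discovery", "days_in_stage": 30},
    {"segment": "smb", "furthest_stage": "qualified", "days_in_stage": 45},
]

survival = survival_by_segment(deals)
stall = median_days_in_stage(deals)

for segment in survival:
    print(segment, survival[segment], "median days in stage:", stall[segment])
```

In this made-up sample, both segments contribute the same number of deals, but the second one rarely gets past the first stage and sits far longer without moving. That is the kind of pattern that can be read weeks before any revenue closes.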

Pattern recognition replaces narrative defence

In most revenue organisations, explanations fill the gaps that data cannot. Missed deals are attributed to timing, market conditions, individual execution, or “edge cases.” At a small scale, those explanations often hold. At scale, they become a way to avoid confronting patterns.

AI changes this by surfacing repeatable loss patterns that humans tend to rationalise away. When the same deal shapes, segments, or entry points consistently underperform, they stop looking like exceptions. Conversations shift from explanation to intervention. Instead of asking why something happened after the fact, teams are forced to ask what needs to change while there is still time to change it. This is uncomfortable - especially for leaders used to defending performance within their own remit - but it is also where progress happens.

Visibility forces ownership upward

As cause and effect become clearer, it becomes harder to push responsibility down into teams or tools. When weak demand signals are visible early, leadership can no longer plausibly claim that outcomes were unknowable. The question shifts from “who owns this metric?” to “who owns this outcome?”

That shift matters for the CMO–CRO relationship. Shared visibility removes the safety net that allowed misalignment to persist. It doesn’t assign blame automatically, but it does remove ambiguity - and ambiguity is what allowed both sides to operate comfortably without shared ownership of results.


Moving From MQLs to Revenue Metrics That Drive Better Decisions

Why functional KPIs fail in an AI-driven model

Functional KPIs were never designed to optimise the revenue system as a whole. They were designed to optimise local performance. Marketing metrics rewarded volume and efficiency at the top of the funnel. Sales metrics rewarded closing skill and quota attainment at the bottom. In isolation, both make sense. Together, they create blind spots.

High MQL volume can coexist with poor revenue efficiency, and often does. Pipeline can look healthy while deal survival rates quietly deteriorate. Closed revenue, meanwhile, hides structural demand issues until it’s too late to correct them. By the time outcomes are clear, the inputs that caused them are already baked in.

AI exposes these limitations by making system-level performance visible. When metrics are no longer delayed or siloed, optimising locally becomes obviously suboptimal. The organisation needs signals that reflect how revenue actually flows, not how functions report success.

Revenue metrics that change behaviour, not just dashboards

What replaces MQLs is not a single “better” metric, but a set of shared signals that force different decisions.

- Pipeline quality becomes a forward-looking indicator of revenue health, showing not just how much demand exists, but how viable it is.

- SQL-to-close rates stop being interpreted purely as sales execution and start functioning as a test of demand validity upstream.

- Velocity emerges as the clearest signal of friction across the system: where deals slow, effort increases, and confidence erodes.
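As a rough illustration of how two of these signals can be computed, the sketch below uses one commonly cited formulation of pipeline velocity: opportunities multiplied by win rate and average deal value, divided by sales cycle length in days. The function names, the use of SQL counts as the opportunity count, and all figures are assumptions made for the example, and your own definitions may differ.

```python
def sql_to_close_rate(sqls, closed_won):
    """Fraction of sales-qualified leads that ended as closed-won deals."""
    if sqls == 0:
        return 0.0
    return closed_won / sqls


def pipeline_velocity(opportunities, win_rate, avg_deal_value, cycle_days):
    """Expected revenue per day: (opportunities * win rate * deal value) / cycle length."""
    if cycle_days <= 0:
        raise ValueError("cycle_days must be positive")
    return opportunities * win_rate * avg_deal_value / cycle_days


# Illustrative segments (assumed numbers): B brings in more SQLs than A,
# but converts fewer of them and takes longer to close.
segments = {
    "A": {"sqls": 40, "closed_won": 10, "avg_deal_value": 20_000, "cycle_days": 50},
    "B": {"sqls": 100, "closed_won": 10, "avg_deal_value": 20_000, "cycle_days": 80},
}

velocity = {}
for name, s in segments.items():
    rate = sql_to_close_rate(s["sqls"], s["closed_won"])
    velocity[name] = pipeline_velocity(
        s["sqls"], rate, s["avg_deal_value"], s["cycle_days"]
    )
    print(f"segment {name}: SQL-to-close {rate:.0%}, velocity {velocity[name]:,.0f} per day")
```

With these assumed inputs, the higher-volume segment produces lower velocity. Volume alone would have ranked the two segments the other way round.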

Loss data becomes more valuable than wins

Wins tell you what worked once. Losses tell you what fails repeatedly.

In an AI-driven model, loss data becomes one of the most valuable strategic inputs. Loss reasons reveal misalignment earlier than win analysis ever could. They show where demand enters the system under false assumptions, where deals stall predictably, and where sales effort is consistently wasted. AI makes this data usable by exposing patterns across segments, messaging, deal size, and entry points - patterns that are easy to miss when losses are reviewed one by one.
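Pattern-finding of this kind does not have to start with a sophisticated model. The sketch below is a deliberately simple stand-in: it counts lost deals by segment, entry point, and loss reason, and keeps the combinations that recur. The field names, the `min_count` threshold, and the sample records are hypothetical.

```python
from collections import Counter


def recurring_loss_patterns(lost_deals, min_count=3):
    """Return (segment, entry_point, loss_reason) combinations seen at least min_count times."""
    counts = Counter(
        (deal["segment"], deal["entry_point"], deal["loss_reason"])
        for deal in lost_deals
    )
    # most_common() sorts by count, highest first.
    return [(pattern, n) for pattern, n in counts.most_common() if n >= min_count]


# Illustrative closed-lost records (assumed data).
lost_deals = [
    {"segment": "smb", "entry_point": "webinar", "loss_reason": "no budget"},
    {"segment": "enterprise", "entry_point": "outbound", "loss_reason": "lost to competitor"},
    {"segment": "smb", "entry_point": "webinar", "loss_reason": "no budget"},
    {"segment": "mid-market", "entry_point": "webinar", "loss_reason": "timing"},
    {"segment": "smb", "entry_point": "paid search", "loss_reason": "no budget"},
    {"segment": "smb", "entry_point": "webinar", "loss_reason": "no budget"},
    {"segment": "mid-market", "entry_point": "webinar", "loss_reason": "timing"},
]

patterns = recurring_loss_patterns(lost_deals, min_count=2)
for (segment, entry_point, reason), n in patterns:
    print(f"{n} losses: segment={segment}, entry={entry_point}, reason={reason}")
```

Reviewed one by one, each of these losses has a plausible story. Grouped, the same segment, entry point, and reason keep recurring, which is a prompt to revisit the demand assumption rather than the individual deal.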


How Shared Metrics Rebuild Trust Between Marketing and Sales Leaders

Why trust breaks down late in the quarter

Trust between marketing and sales leadership rarely erodes in abstract strategy discussions. It breaks under pressure, typically late in the quarter, when outcomes are already constrained and explanations matter more than solutions.

Surprises force justification. Justification turns into defensiveness. And defensiveness quickly becomes blame. At that point, collaboration gives way to self-protection. Marketing explains why demand was sound. Sales explains why execution was harder than expected. Neither side is wrong in isolation, but the system has already failed.

How AI reduces end-of-quarter volatility

AI changes this dynamic by shifting signal detection forward. Instead of discovering demand issues at the point of close, leadership teams start seeing them earlier in how deals behave.

Weak demand shows up as patterns:

- stalled progression across specific segments

- uneven velocity that doesn’t correct itself

- increasing sales effort without corresponding movement

That earlier visibility matters because it creates optionality. Corrections can happen while the pipeline is still fluid. Targeting can be refined. Messaging adjusted. Sales focus rebalanced.
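One way to put a number on "effort without movement" is to measure, per segment, the share of open deals that are receiving a lot of sales activity but have not changed stage recently. The sketch below assumes hypothetical fields `touches_last_30d` and `days_since_stage_change`, and arbitrary thresholds that would need tuning for any real pipeline.

```python
from collections import defaultdict


def effort_without_movement(deals, min_touches=5, min_days_stalled=21):
    """Share of each segment's open deals with high activity but no recent stage change."""
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for deal in deals:
        totals[deal["segment"]] += 1
        high_effort = deal["touches_last_30d"] >= min_touches
        stalled = deal["days_since_stage_change"] >= min_days_stalled
        if high_effort and stalled:
            flagged[deal["segment"]] += 1
    return {segment: flagged[segment] / totals[segment] for segment in totals}


# Illustrative open deals (assumed data).
open_deals = [
    {"segment": "smb", "touches_last_30d": 8, "days_since_stage_change": 30},
    {"segment": "smb", "touches_last_30d": 6, "days_since_stage_change": 25},
    {"segment": "smb", "touches_last_30d": 2, "days_since_stage_change": 40},
    {"segment": "smb", "touches_last_30d": 7, "days_since_stage_change": 5},
    {"segment": "enterprise", "touches_last_30d": 9, "days_since_stage_change": 3},
    {"segment": "enterprise", "touches_last_30d": 1, "days_since_stage_change": 50},
]

shares = effort_without_movement(open_deals)
print(shares)
```

A segment where this share keeps rising is a candidate for the corrections described above, while the pipeline is still fluid enough to change.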

Trust emerges as a byproduct of predictability

When shared metrics reduce volatility, conversations move earlier in the cycle. Disagreements still happen, but they happen when action is possible. Over time, trust shifts away from personal confidence in individuals and toward confidence in the operating model itself.

Common Failure Modes in AI-Driven Revenue Alignment

Automating the wrong definitions

If the organisation feeds AI the same functional KPIs it used before, the output will look more precise without being more useful. Common warning signs show up quickly:

- MQL definitions stay unchanged, just modelled more tightly

- attribution is still treated as causality

- dashboards improve, decisions don’t

The risk here is false confidence, not bad data. Once flawed definitions are automated, they become harder to challenge because they appear objective.

Treating AI as insight, not intervention

Many teams stop at visibility. They can see stalled velocity. They can identify weak segments. They can explain performance with more nuance than before. What doesn’t change is when they act.

The pattern usually looks like this:

AI surfaces an issue → leadership agrees it’s a problem → action waits for the next planning cycle

Knowing more without acting sooner doesn’t change outcomes. AI only creates leverage when it shortens the distance between signal and intervention. Otherwise, it becomes a better way of narrating results after the fact.

Delegating ownership away from the executive level

Revenue alignment collapses quickly when AI becomes “a RevOps thing.” Tools get implemented. Models get tuned. Reports circulate. And executive ownership quietly recedes. When CMOs and CROs disengage from how signals are interpreted and acted on, the organisation defaults back to functional optimisation.


What Strong CMO–CRO Partnerships Do Differently Today

They optimise for learning speed, not certainty

Strong CMO–CRO partnerships don’t try to eliminate uncertainty - they assume it’s unavoidable and design around how quickly they can correct. Instead of debating forecasts to death, they focus on how fast the system tells them they’re wrong. AI is used less as a prediction engine and more as a testing mechanism. Assumptions about segments, messaging, deal shape, or timing are treated as hypotheses, not truths.

Two things follow from this mindset:

- faster correction consistently beats perfect prediction

- confidence comes from feedback loops, not conviction

When learning speed is the goal, disagreement becomes cheaper. Leaders don’t need to defend being “right” - they need to spot signals early and adjust. That alone removes a lot of the friction that traditionally sits between marketing and revenue leadership.

They treat revenue as a system, not a sequence

Weaker partnerships still think about revenue as a handoff: marketing generates, sales converts, revenue appears at the end. Strong partnerships treat revenue as a system with shared inputs, shared constraints, and shared failure points. Demand, conversion, and velocity are viewed together, not in isolation. Pipeline quality is discussed alongside sales effort. Messaging decisions are evaluated against downstream behaviour, not just top-of-funnel response.

This translates to:

- fewer handoffs and fewer artificial stage boundaries

- more attention to flow than to volume

- earlier joint decisions about where not to invest


From Alignment to Joint Revenue Accountability

For years, the executive question has been whether marketing and sales are aligned. That question is now outdated. The more relevant question is this:

Can we explain, predict, and correct our revenue engine - together?

AI makes that question unavoidable. When cause and effect are clearer, alignment alone stops being sufficient. Coordination without ownership doesn’t hold up under scrutiny. What’s required instead is joint accountability for how revenue is created, not just how it’s reported. This doesn’t mean CMOs become sales leaders or CROs become marketers. It means both leaders accept responsibility for the performance of the system between them.

Key Takeaways

- Misalignment was never about intent. Marketing and sales operated rationally inside a system that rewarded activity over outcomes and delayed accountability long enough for problems to be explained away.

- AI doesn’t improve alignment; it removes cover. When the gap between decision and consequence shrinks, ambiguity disappears. What used to be a debate becomes observable behaviour in the pipeline.

- Shared metrics matter because they change timing, not because they create consensus. Seeing weak signals earlier is what reduces tension at the end of the quarter. Trust improves when surprises disappear.

- Pipeline quality and velocity are leadership signals, not operational metrics. When these degrade, the issue is rarely execution alone. It’s usually demand assumptions surfacing downstream.

- Strong CMO–CRO partnerships optimise for correction speed, not forecast confidence. Being able to change course early is more valuable than being right late.

- The real shift is ownership of the space between marketing and sales. Alignment stops being the goal once both leaders are accountable for how the revenue engine behaves end to end.


FAQs

Does improving CMO–CRO alignment require a full RevOps overhaul?

Not necessarily. Many organisations see meaningful improvement without a structural overhaul by changing what leadership reviews together and when. The biggest gains usually come from introducing shared revenue signals earlier in the quarter and using them to make real trade-offs, rather than from reorganising teams or implementing new layers of process.

How should boards evaluate CMO–CRO effectiveness in an AI-driven model?

Boards should look beyond lead volume and quarterly revenue snapshots and focus on predictability and correction speed. Questions about pipeline survival, velocity trends, and how quickly leadership responds to weak signals tend to reveal far more about revenue health than traditional performance metrics.

What role does RevOps play once CMOs and CROs share accountability?

RevOps becomes an enabler, not an owner. Its role is to ensure data consistency, surface signals clearly, and support faster decision-making, not to arbitrate between marketing and sales. When RevOps is asked to “fix alignment,” it usually signals that executive ownership is missing.

Can this model work in organisations with long or complex sales cycles?

Yes - in fact, longer sales cycles often benefit more. Earlier visibility into deal progression, effort-to-movement ratios, and segment-level velocity helps leadership identify demand quality issues months before revenue impact would normally surface. The longer the cycle, the higher the cost of late correction.

What’s the biggest risk when introducing AI into revenue leadership discussions?

Overconfidence. AI can make weak assumptions look precise and give leaders a false sense of control. The organisations that benefit most treat AI outputs as prompts for action and testing, not as answers. Governance, definition discipline, and executive involvement matter more than model sophistication.